Alright… you finally have data in your data warehouse, and now the fun begins! But wait... building data models directly in the Snowflake UI doesn't scale well. It sounds easy enough: open a worksheet, write your SQL, create your final model, and push it to production. But what about lineage (which Snowflake is working to improve), separate environments, collaboration, and version control? These are very hard to manage in the UI alone. Let's bring dbt into the picture.

By now, we've all heard of dbt, whether we're using it in small personal projects, self-hosting dbt Core in production, or running dbt Cloud at scale. So we'll skip the introduction to dbt and dive straight into the deployment options. How do we decide which approach is best for our organization? As always, there's no one-size-fits-all answer. We've outlined three scenarios to help you choose between self-hosted dbt Core, dbt Cloud, and a hybrid of the two. Let's begin:

Is your team heavy on data engineering? Do you have DevOps or platform engineering experience within the organization that you can draw on? Are your analytics engineers comfortable with the command line? Does your organization have very specific security requirements that must be met? If so, self-hosting dbt Core might be the right choice.

Here’s your recipe:

Self-hosting dbt Core gives you the flexibility and extensibility to meet your workload needs. You deploy it within the guardrails of your own cloud platform and run it as one step in your end-to-end ELT workflows. You have full control, but that comes with responsibility for ongoing maintenance and monitoring.
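
As a rough illustration of dbt Core running as one step of a self-hosted ELT workflow, the Python sketch below shells out to the dbt CLI once ingestion has finished. It assumes dbt Core is installed in the runner image and that `profiles.yml` defines a `prod` target; the paths and the function names `build_dbt_steps` and `run_transform_step` are hypothetical, and your own orchestrator would call something along these lines.

```python
import subprocess
from pathlib import Path


def build_dbt_steps(project_dir: Path, profiles_dir: Path, target: str = "prod") -> list[list[str]]:
    """Return the dbt CLI invocations for one transformation run (hypothetical helper)."""
    return [
        # Install the packages declared in packages.yml.
        ["dbt", "deps", "--project-dir", str(project_dir)],
        # Run seeds, models, snapshots, and tests in DAG order against the chosen target.
        [
            "dbt", "build",
            "--project-dir", str(project_dir),
            "--profiles-dir", str(profiles_dir),
            "--target", target,
        ],
    ]


def run_transform_step(project_dir: Path, profiles_dir: Path, target: str = "prod") -> None:
    """Run the dbt commands in order; check=True raises CalledProcessError on a
    non-zero exit, so the surrounding pipeline marks this step as failed."""
    for cmd in build_dbt_steps(project_dir, profiles_dir, target):
        subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # Hypothetical paths inside the container image that runs the ELT pipeline.
    run_transform_step(Path("/app/dbt_project"), Path("/app/profiles"))
```

Keeping command construction separate from execution makes the step easy to unit-test and to reuse from whichever scheduler you already operate.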

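Additionally, if you're reusing existing internal infrastructure and talent, this approach could be your most cost-effective option. Be sure to build Slim CI into your pipeline to reduce costs: instead of rebuilding the whole project on every pull request, it builds and tests only the modified models and their downstream dependents.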
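
Slim CI relies on dbt's state comparison: the CI run selects `state:modified+` (changed nodes plus everything downstream) and uses `--defer` so references to unchanged models resolve to production. The sketch below assumes the production `manifest.json` has already been downloaded into a local directory (here a hypothetical `prod-artifacts/`) and that a `ci` target exists in `profiles.yml`; `slim_ci_command` is a hypothetical helper name.

```python
import subprocess
from pathlib import Path


def slim_ci_command(state_dir: str, has_prod_manifest: bool, target: str = "ci") -> list[str]:
    """Build the dbt command for a CI run.

    With a production manifest available, build only modified nodes and their
    children, deferring unchanged upstream refs to production. Without one,
    dbt has nothing to compare against, so fall back to a full build.
    """
    if has_prod_manifest:
        return [
            "dbt", "build",
            "--select", "state:modified+",
            "--defer",
            "--state", state_dir,
            "--target", target,
        ]
    return ["dbt", "build", "--target", target]


if __name__ == "__main__":
    # Hypothetical directory where the CI job places the production artifacts.
    state = Path("prod-artifacts")
    cmd = slim_ci_command(str(state), (state / "manifest.json").is_file())
    subprocess.run(cmd, check=True)
```

The full-build fallback is a design choice for the very first run (or a missing artifact), when there is no production state to compare against yet.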

On the other hand, perhaps your analytics engineers enjoy developing locally, but the wider organization lacks the resources to self-host dbt. In this case, a hybrid solution can work very well: develop locally with dbt Core, then deploy and schedule through dbt Cloud.

This approach is great when a GUI isn't the preferred developer interface but the organization can't meet the requirements for self-hosting. It's also a good fit for larger teams where several members work on models at the same time.

Here’s your recipe:

A purely dbt Cloud-native workflow can present collaboration challenges if it isn't managed properly. For example, you can't lock branches in the dbt Cloud UI, so multiple developers may end up working on the same branch simultaneously. You can address this with a GitHub Actions workflow that triggers a dbt Cloud job through the API, according to your branching strategy and CI/CD process.

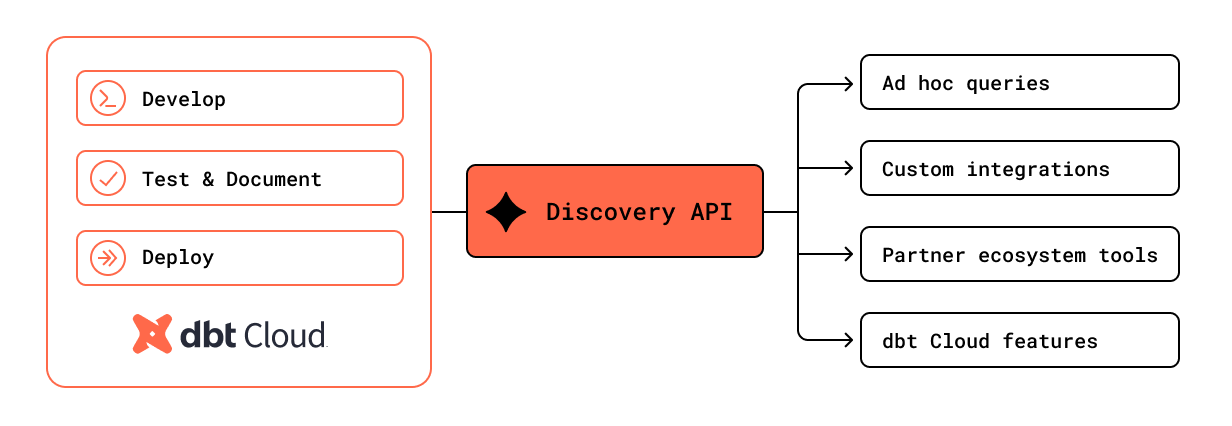
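
A GitHub Actions step for this could run a small script like the one below, which maps the pushed branch to a dbt Cloud job and calls the v2 "trigger job run" endpoint (`POST /api/v2/accounts/{account_id}/jobs/{job_id}/run/`). This is a sketch under assumptions: the job IDs in `JOBS_BY_BRANCH` are placeholders, the `DBT_CLOUD_*` environment variable names are hypothetical secrets you would configure yourself, and the base URL depends on your dbt Cloud region (`https://cloud.getdbt.com` is only a default here). `GITHUB_REF_NAME` is set by GitHub Actions.

```python
import json
import os
import urllib.request

# Hypothetical branch-to-job mapping; replace with the jobs that match your branching strategy.
JOBS_BY_BRANCH = {
    "main": 111111,     # production deploy job (placeholder ID)
    "develop": 222222,  # staging job (placeholder ID)
}


def build_trigger_request(base_url: str, account_id: int, job_id: int,
                          token: str, branch: str) -> urllib.request.Request:
    """Prepare a POST to the dbt Cloud v2 trigger-job-run endpoint."""
    url = f"{base_url.rstrip('/')}/api/v2/accounts/{account_id}/jobs/{job_id}/run/"
    body = {
        "cause": f"Triggered by GitHub Actions on {branch}",  # required by the endpoint
        "git_branch": branch,  # optional: run the job against this branch
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Token {token}", "Content-Type": "application/json"},
        method="POST",
    )


def main() -> None:
    branch = os.environ["GITHUB_REF_NAME"]
    job_id = JOBS_BY_BRANCH.get(branch)
    if job_id is None:
        print(f"No dbt Cloud job mapped to branch {branch!r}; skipping.")
        return
    req = build_trigger_request(
        base_url=os.environ.get("DBT_CLOUD_URL", "https://cloud.getdbt.com"),
        account_id=int(os.environ["DBT_CLOUD_ACCOUNT_ID"]),
        job_id=job_id,
        token=os.environ["DBT_CLOUD_API_TOKEN"],
        branch=branch,
    )
    # urlopen raises HTTPError on a non-2xx response, which fails the workflow step.
    with urllib.request.urlopen(req) as resp:
        run = json.load(resp)["data"]
        print(f"Started dbt Cloud run {run['id']}")


if __name__ == "__main__":
    main()
```

Because only branches listed in the mapping trigger a job, the mapping itself becomes the place where your branching strategy is encoded.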

Finally, going fully with dbt Cloud is an excellent option for teams that don't want to worry about orchestration and want to take advantage of the many extended features available out of the box, such as:

Leveraging dbt Cloud is the quickest and easiest way to get started: you focus on developing your models while dbt Cloud handles the rest. Plus, dbt docs and the dbt Semantic Layer make it easy to bring in non-analytics staff, enabling wider adoption of the tool.

As you can see, there is no one-size-fits-all solution. Every organization's circumstances are different, so base your decision on both your current and your planned internal setup. We've worked with all three approaches, and each has its place within the modern data stack.

As always, if you need help setting up this infrastructure, contact us and we'll get you started.

Stay tuned: in the coming weeks, we'll launch our open-source series, covering our internal setups across a wide range of topics, including ELT, DataOps, data transformation, and more. We'll also dive deeper into our self-hosted dbt solution, which offers two different methods for self-hosting your dbt project.